At HacknRoll 2020, my team built smart glasses that use computer vision to help visually impaired people recognize emotions, and we placed in the Top 8 out of more than 120 teams.

The Project: SEENSE

We developed SEENSE: smart glasses that use computer vision and emotion recognition to help users understand the emotions of the people around them. The project targeted two groups: the 285 million visually impaired individuals worldwide, and people with autism spectrum disorder or Asperger's syndrome, who can struggle to interpret social cues. According to WHO statistics, "1 in 160 children has an autism spectrum disorder."

The smart glasses give audio feedback that helps users understand others' emotions. We emphasized that the device was meant "not as a replacement for their current methods of perception, but as an augmentation."

Technical Implementation

The SEENSE prototype in action.

The system comprises a Raspberry Pi 4 with a camera module, a piezo buzzer, a button for actuation, and software written in Python. When the user presses the button, the device captures an image, sends it to an API for emotion analysis, and reports the result through audio or Morse-coded piezo buzzer signals.
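
The button-to-feedback flow can be sketched roughly as follows. This is a minimal illustration, not the code we ran at the hackathon: the function names, the stand-in capture and analysis functions, and the assumption that the API returns a dictionary of emotion labels with confidence scores are all hypothetical.

```python
from typing import Callable, Dict


def dominant_emotion(scores: Dict[str, float]) -> str:
    """Pick the emotion label with the highest confidence score."""
    if not scores:
        return "unknown"  # nothing detected, e.g. no face in the frame
    return max(scores, key=lambda label: scores[label])


def handle_button_press(
    capture: Callable[[], bytes],
    analyze: Callable[[bytes], Dict[str, float]],
    announce: Callable[[str], None],
) -> str:
    """Run one capture -> analyze -> feedback cycle and return the label."""
    image = capture()          # hypothetical: grab a frame from the camera
    scores = analyze(image)    # hypothetical: send the frame to the emotion API
    label = dominant_emotion(scores)
    announce(label)            # hypothetical: speak or buzz the result
    return label


# Stand-ins so the sketch runs without a Pi, a camera, or network access.
def fake_capture() -> bytes:
    return b"raw-image-bytes"


def fake_analyze(image: bytes) -> Dict[str, float]:
    # Assumed response shape: emotion label -> confidence score.
    return {"happiness": 0.91, "neutral": 0.07, "sadness": 0.02}


spoken = []  # collects what would have been announced to the user
result = handle_button_press(fake_capture, fake_analyze, spoken.append)
```

Passing the capture, analysis, and feedback steps in as functions keeps the flow testable off-device. On the Pi itself, a GPIO library such as gpiozero could trigger `handle_button_press` from its `Button.when_pressed` callback, with the stand-ins replaced by real camera, API, and output code.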

We implemented two feedback mechanisms: audio through text-to-speech, and Morse-coded piezo buzzer tones that are accessible to deaf-blind users.
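
To illustrate the Morse-coded feedback, here is a small sketch that turns an emotion label into a buzzer on/off schedule using standard Morse timing (a dot is one unit, a dash is three, with a one-unit gap inside a letter and a three-unit gap between letters). Whether the prototype spelled out whole words or used shorter codes is not recorded here, so spelling out the label is an assumption, and the names `to_morse` and `buzzer_schedule` are hypothetical.

```python
from typing import List, Tuple

# International Morse code for the letters A-Z.
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}


def to_morse(text: str) -> str:
    """Encode a single word as Morse, one space between letters.

    Characters outside A-Z are skipped.
    """
    return " ".join(MORSE[ch] for ch in text.upper() if ch in MORSE)


def buzzer_schedule(text: str, unit: float = 0.1) -> List[Tuple[bool, float]]:
    """Return (buzzer_on, seconds) steps for one word.

    Timing: dot = 1 unit, dash = 3 units, gap inside a letter = 1 unit,
    gap between letters = 3 units.
    """
    schedule: List[Tuple[bool, float]] = []
    letters = to_morse(text).split()
    for i, letter in enumerate(letters):
        for j, symbol in enumerate(letter):
            schedule.append((True, unit * (1 if symbol == "." else 3)))
            if j < len(letter) - 1:
                schedule.append((False, unit))      # gap within a letter
        if i < len(letters) - 1:
            schedule.append((False, unit * 3))      # gap between letters
    return schedule
```

On the device, each `(buzzer_on, seconds)` step would drive the buzzer pin high or low for the given duration; keeping the schedule as plain data makes the timing easy to check without hardware.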

Key Design Decisions

We deliberately chose a button interface over voice commands because "a voice interface wouldn't be the best solution to the input problem as it would interrupt the flow of a conversation." This design decision emerged from collaborative discussions with hackathon mentors and community members.

Technical Challenges

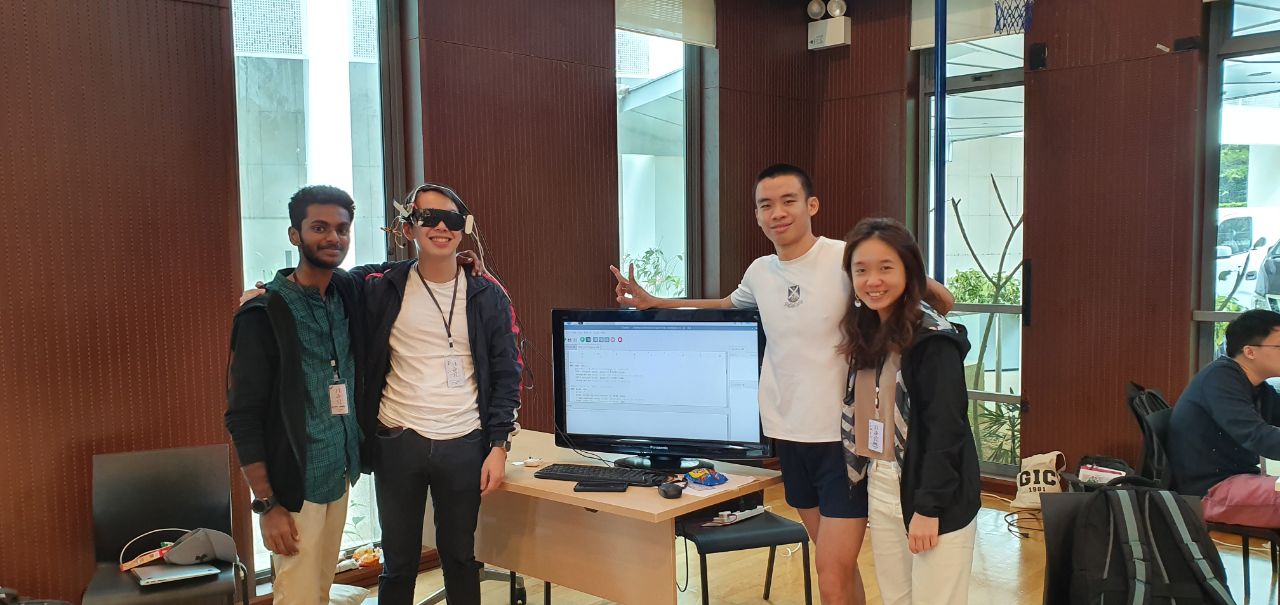

The team working on the prototype during the hackathon.

Hardware availability shaped the build. We could not use a Raspberry Pi Zero because its camera ribbon connectors were unavailable, and we substituted a piezo buzzer for the haptic buzzer we would ideally have used. We also had some initial trouble connecting the Raspberry Pi 4 and flashing the SD card with an appropriate OS version. NUS Hackers and the MLH organizers helped us get past these setup difficulties.

Accomplishments

Top 8 placement at HacknRoll 2020.

The team successfully integrated the hardware components, demonstrated the portable prototype throughout the hackathon, and learned to seek help effectively from organizers and mentors. For the first-time hardware hackers among us, the experience provided valuable exposure to Raspberry Pi hardware integration and breadboard connections, along with a deeper understanding of the challenges faced by visually impaired individuals.

Future Directions

The team plans to implement offline emotion detection using OpenCV, which would move the computer vision onto the device and remove the API dependency. We also want to reduce the form factor, potentially by using microcontrollers, and to integrate haptic feedback. Further applications include obstacle detection and avoidance, as well as navigation along common routes. Commercialization would involve partnerships with API providers and hardware manufacturers.